Balancing Innovation and Childhood: The Ethical Side of AI in Elementary Education

Summary:

In a classroom filled with curious faces and wide-eyed children, the hum of learning is timeless. Yet now, in that very classroom of the 21st century, something else has crept into view: the promise of artificial intelligence (AI) tools poised to transform how children learn, how teachers teach, and how schools operate.

The topic of AI in Elementary Education is no longer science fiction or an optional add-on; it’s becoming mainstream.

But as we adopt this wave of innovation, a critical question arises: how do we balance this technological surge with the essence of childhood itself?

On one hand, AI promises to personalise learning, adapt to each child’s pace and style, free up teacher time for deeper engagement, and address long-standing gaps in education.

On the other hand, there lies an ethical minefield: the risks of data misuse, algorithmic bias, diminished creativity, over-surveillance, and compromised child development.

The goal becomes not merely to deploy AI but to deploy it responsibly.

This means asking profound questions: How does childhood development interact with algorithmic systems?

Can a child’s sense of autonomy, curiosity, and emotional growth thrive under AI-driven cues? How can we ensure that the tools, rather than children, are shaped by ethical pedagogy?

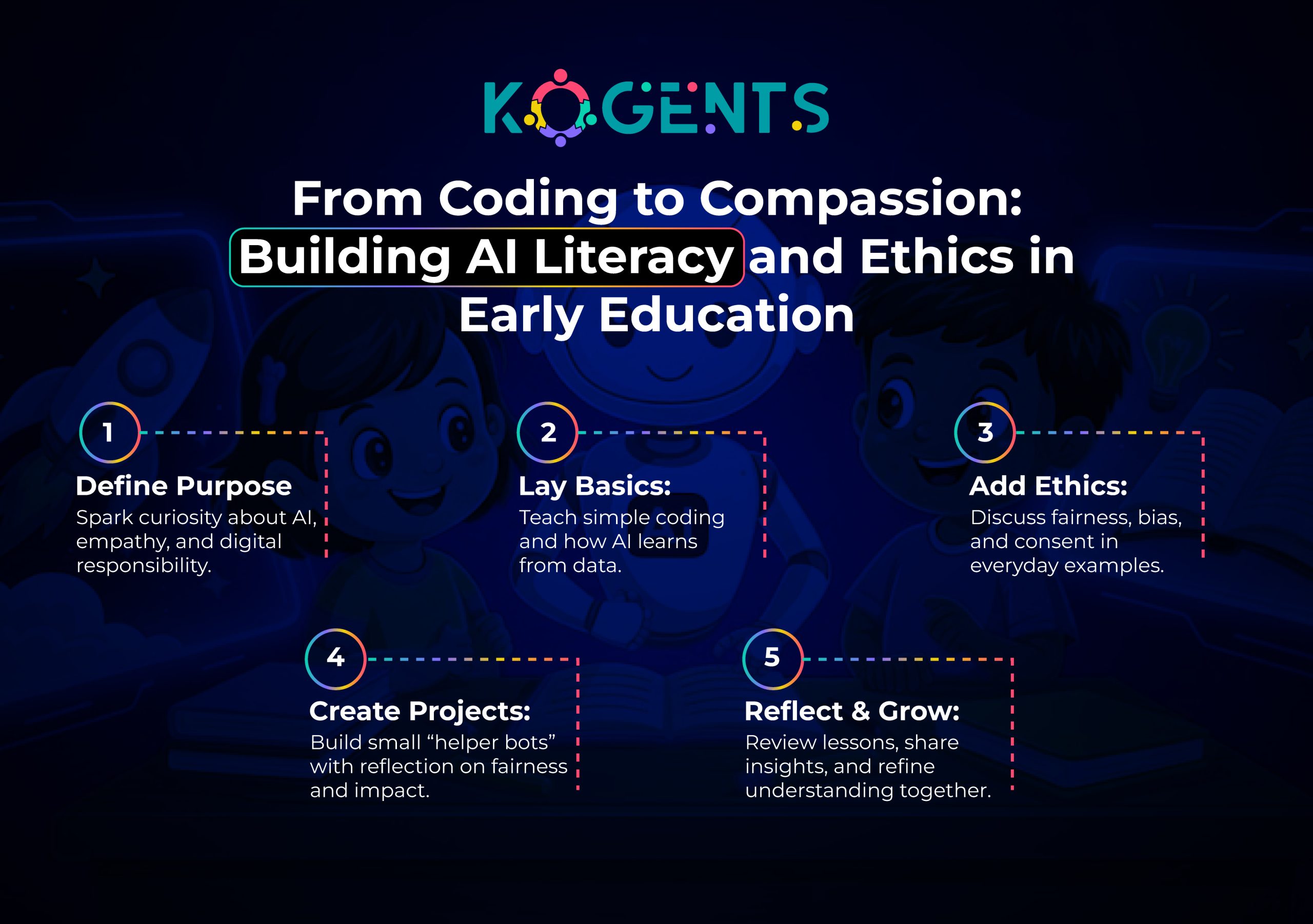

In this blog, we dive deep into the intersection of innovation and childhood, exploring the ethical implications of AI in primary education, the challenge of balancing technology and child development in schools, and how artificial intelligence ethics in elementary learning must become core to our planning.

Key Takeaways

- Ethical deployment of AI in elementary settings hinges on safeguarding children’s cognitive development, promoting autonomy, and preventing over-reliance.

- Data privacy for minors and transparent, human-centred algorithms are non-negotiables in classroom technology integration.

- Bias in educational algorithms can replicate existing inequalities; equity must be built in from the start.

- Teachers must be equipped with digital literacy in early education and an understanding of AI learning ethics for kids, so that they act as guides, not just operators of tools.

- Success lies not in replacing teachers or childhood, but in achieving responsible innovation in K–12 education, where technology amplifies, not replaces, the human and developmental dimension.

Why Innovation in Elementary Education Matters

According to UNESCO,

“AI has the potential to address some of the biggest challenges in education today, innovate teaching and learning practices, and accelerate progress towards SDG 4”.

However, as children at this stage are in critical phases of childhood development, including physical, cognitive, emotional, and social domains, the introduction of AI-powered tools for education must be especially sensitive.

The notion of balancing innovation vs. childhood development in education becomes central.

Innovation in elementary education matters for several reasons:

- Early foundation – The elementary years set the groundwork for cognitive, emotional, and social development. Introducing advanced tools early can amplify positive outcomes or risk undermining them.

- Personalised learning – Elementary learners have varied paces and styles. AI can support differentiated instruction and help meet each child where they are.

- Teacher support – Teachers in primary grades face high demands. AI tools can assist with tracking progress, creating engaging content, and freeing time for one-on-one support.

- Global challenges – Many regions struggle with teacher shortages, large class sizes, and resource constraints. Innovation with AI offers a scalable way to help. But this must be done responsibly.

- Digital literacies – Early exposure to digital literacy in early education sets children up for later success in an AI-rich world. They must learn not just with AI, but about AI and its ethical dimensions.

Yet innovation is not a panacea. Without attention to responsible AI use, educational psychology, data privacy for minors, and the moral dimension of technology and childhood, innovation can become harmful.

The Ethical Imperative: What Does “Childhood” Mean in the Age of AI?

Before exploring ethics, we must ask what childhood truly means. It’s a time of growth, play, creativity, and emotional learning, not just optimization.

When AI enters classrooms, it must nurture, not narrow, these experiences.

The real challenge isn’t deploying technology; it’s preserving childhood while embracing innovation responsibly.

Core Ethical Dimensions for AI in Elementary Education

Here, we examine major ethical concerns and issues when deploying AI in elementary settings.

Data Privacy and Student Protection

One of the most immediate concerns when implementing AI at the elementary level is data privacy for minors.

Children’s data, whether academic, behavioural, biometric, or emotional, can be highly sensitive.

According to a review, “One of the biggest ethical issues surrounding the use of AI in K-12 education relates to the privacy concerns of students and teachers.”

Key issues include: what data is collected? Who has access? Is the data used for marketing? How long is it stored? Are children’s identities protected? Are parents informed and consent obtained?

The principles of beneficence (promoting well-being) and non-maleficence (avoiding harm) from the ethics literature must be applied: AI tools should promote the child’s well-being and avoid harm (e.g., data leaks, profiling, unwanted surveillance).

Algorithmic Bias and Fairness

AI systems are only as fair as the data and design behind them. In elementary settings, this translates to the risk that systems may reinforce biases related to socio-economic status, race, gender, language background, or special educational needs.

Note: “Bias and fairness in AI algorithms” is a key ethical concern.

| Example: if a personalised system recommends slower tasks for children from lower-income areas, it may limit their growth potential. |

Equity must thus be engineered into AI deployments in schools.
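As a rough illustration of what a basic fairness check could look like, the sketch below compares how often a system recommends enriched tasks to different groups of children and flags any group that falls well behind. The group labels, the sample log, and the 0.8 ratio threshold are all assumptions made for this example, not details of any real product or audit standard.

```python
from collections import defaultdict


def enrichment_rates(records):
    """Return the share of enriched-task recommendations per group."""
    totals = defaultdict(int)
    enriched = defaultdict(int)
    for group, got_enriched in records:
        totals[group] += 1
        if got_enriched:
            enriched[group] += 1
    return {group: enriched[group] / totals[group] for group in totals}


def flag_disparity(rates, min_ratio=0.8):
    """List groups whose rate is below min_ratio times the highest rate.

    The 0.8 default is an illustrative assumption, not a rule from the article.
    """
    best = max(rates.values())
    if best == 0:
        return []
    return sorted(g for g, r in rates.items() if r / best < min_ratio)


# Hypothetical log: (group label, was an enriched task recommended?)
log = [
    ("higher_income", True), ("higher_income", True),
    ("higher_income", True), ("higher_income", False),
    ("lower_income", True), ("lower_income", False),
    ("lower_income", False), ("lower_income", False),
]

rates = enrichment_rates(log)
flagged = flag_disparity(rates)
print(rates)
print(flagged)
```

A flagged group is a prompt for human investigation rather than proof of bias; a real audit would also need larger samples and input from teachers.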

Autonomy, Creativity, and Child Cognitive Development

Among the less-often discussed but equally potent risks is the impact of AI on the child’s autonomy, creativity, critical thinking, and development of agency.

A 2025 article notes: “One significant ethical concern is the potential for AI systems to limit children’s autonomy and creativity.”

When AI dictates learning pathways in a prescriptive manner, children may lose opportunities to explore, wonder, make mistakes, and engage with peers, all vital to development.

The field of educational psychology reminds us that child development is not simply about efficient learning but about discovery, metacognition, and formative mistakes.

Transparency, Explainability, and Teacher Oversight

- The “black box” nature of AI is problematic in a classroom context.

- Teachers, children, and parents must understand how an AI tool arrived at a recommendation or decision.

- The principle of explicability, that AI operations should be transparent and understandable, is central.

- Without teacher oversight and interpretability, decisions may be made too autonomously, reducing human supervision and accountability.

- This raises concerns around trust, professional judgement, and safeguarding children.

Equity, Access, and the Digital Divide

- Technology often amplifies existing inequalities if not carefully managed.

- The digital divide, differences in access to devices, connectivity, and supportive home environments, means that AI in elementary education may widen the gap if only some children benefit.

| Reminder: The notion of responsible AI use in classrooms must therefore include equity strategies. |

Emotional, Social, and Developmental Psychology Concerns

- Children in elementary school are developing not only intellectually but also socially and emotionally.

- Over-reliance on screens, reduced peer interaction, over-monitoring by AI, or surveillance of emotion may hinder social learning.

Caution: Educational and developmental psychology remind us that child cognitive development is multi-dimensional, and tools must support holistic growth, not just test scores.

Balancing Technology and Child Development in Schools

With the ethical dimensions clear, how do schools, teachers, administrators, and policymakers walk the tightrope between embracing innovation in elementary learning and safeguarding childhood?

Frameworks and Guiding Principles

Organizations such as the OECD, UNESCO, and the IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems have outlined principles of trustworthy AI: transparency, fairness, accountability, human-centred design, and inclusivity.

Schools can adapt these into practical policies: ensuring human oversight, validating systems for bias, building in child-friendly design, and practising data minimisation.
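To make the idea of collecting only what is needed more concrete, here is a minimal sketch that filters a student record against an allow-list before it is stored or shared with a vendor. The field names and the allow-list itself are hypothetical; each school would need to decide which fields a given tool genuinely requires.

```python
# Hypothetical allow-list: the only fields this imaginary tool needs.
ALLOWED_FIELDS = {"student_id", "grade", "reading_level"}


def minimise(record, allowed=ALLOWED_FIELDS):
    """Keep only allow-listed fields and drop everything else."""
    return {key: value for key, value in record.items() if key in allowed}


# Hypothetical raw record with more detail than the tool needs.
raw = {
    "student_id": "S-001",
    "grade": 4,
    "reading_level": "B2",
    "home_address": "12 Example Street",
    "webcam_emotion_score": 0.73,
}

stored = minimise(raw)
print(stored)
```

Using an allow-list rather than a block-list means any new field a vendor starts sending is dropped by default until someone decides it is needed.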

Teacher Training and Human-Centred Design

- A key pillar is equipping teachers not just to operate AI tools but to understand their ethical implications.

- Teacher professional development must include elements of AI learning ethics for kids, digital literacy in early education, and understanding how to integrate technology without losing the relational, human side of teaching.

Responsible AI Use in Classrooms

- Instead of replacing teachers or childhood experiences, AI should support them.

- The innovation vs childhood development balance means technology should serve pedagogy, not dominate it.

| Example: adaptive tools can free teachers to lead more peer discussion, project-based learning, and play-based exploration. |

Monitoring, Evaluation, and Feedback Loops

Continuous monitoring is vital: Are AI tools actually improving learning outcomes? Are children’s social and emotional needs being met? Are biases emerging? Schools must build feedback loops, audit systems for unintended effects, and adapt accordingly.

The Role of Policy and Regulation

- Government policy, regulatory frameworks, and institutional governance matter.

- Without these, innovation may outpace safeguards.

Example: mandatory bias audits, data protection laws for minors, transparent vendor agreements, and ethical procurement of AI tools.

Case Studies

Some notable examples of AI in education are described below:

Case Study 1: Adaptive Learning Algorithms in Elementary Grades

- In one I-Tech initiative, an elementary school district deployed an adaptive platform for Grades 3–5 that tracks reading comprehension and maths fluency.

- The system uses algorithms to customise tasks.

- Teachers found that students progressed faster, but also noticed that the algorithm sometimes produced monotonous, drill-based tasks, reducing peer interaction time.

- An internal audit flagged that the system recommended fewer enriched tasks for students labelled “slow learners”, which raised fairness concerns.

- The school remedied this by introducing human-review checkpoints and ensuring enriched tasks for all children, balancing personalised learning with creative opportunities.

Lessons: Using personalised learning algorithms can raise efficiency, but without human oversight and fairness auditing, it may limit autonomy and creativity.
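One way to picture a human-review checkpoint like the one described in this case study is a simple rule that sends a child's plan to a teacher whenever it contains too few enriched tasks. The sketch below is an assumption-laden illustration: the plan structure, the `enriched` flag, and the 25% threshold are invented for the example and are not taken from the district's actual system.

```python
def needs_human_review(recommendations, min_enriched_share=0.25):
    """Return True when a plan should be checked by a teacher.

    A plan is sent for review if it is empty or if the share of
    enriched tasks is below min_enriched_share (an illustrative default).
    """
    if not recommendations:
        return True
    enriched = sum(1 for task in recommendations if task["enriched"])
    return enriched / len(recommendations) < min_enriched_share


# Hypothetical weekly plans produced by an adaptive platform.
drill_heavy_plan = [
    {"task": "phonics drill", "enriched": False},
    {"task": "spelling drill", "enriched": False},
    {"task": "times-table drill", "enriched": False},
    {"task": "fluency drill", "enriched": False},
    {"task": "story writing", "enriched": True},
]
balanced_plan = [
    {"task": "phonics drill", "enriched": False},
    {"task": "group science project", "enriched": True},
    {"task": "spelling drill", "enriched": False},
    {"task": "story writing", "enriched": True},
]

print(needs_human_review(drill_heavy_plan))
print(needs_human_review(balanced_plan))
```

The rule only decides when a teacher looks at a plan; what to change remains the teacher's call.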

Case Study 2: AI Tutoring System with Ethical Safeguards

- A UK primary school piloted an AI-driven tutoring system for children needing extra support. The system provided interactive sessions with an AI avatar, tracked progress, and shared reports with teachers.

- The rollout ensured consent, transparency, teacher training, and emotional monitoring, resulting in a 12% reading score boost.

- Yet, students preferred teacher-led collaboration, leading to a balanced AI-plus-peer learning model.

Lessons: A blended model of AI + human interaction, with ethical safeguards and transparent design, supports both innovation and childhood development.

Case Study 3: Inclusive Education – AI Tools for Children with Special Educational Needs (SEN)

- An inclusive-education programme used AI interfaces to support children with reading comprehension difficulties.

- The design adopted a participatory strategy grounded in the “Capability Approach” (focusing on what children can do) and involved children, teachers, and technologists in design.

- The system improved engagement, offered differentiated scaffolding, and freed teacher time to focus on social-emotional support.

- However, challenges emerged around data consent, ensuring the algorithms did not pigeonhole children by ability, and maintaining flexibility.

Lessons: AI can enhance inclusion when carefully designed, but ethical vigilance remains critical.

Table: Ethical Dimensions vs Practical Implementation

| Ethical Dimension | Potential Risks in Elementary AI Deployment | Practical Mitigations / Best Practices |
| --- | --- | --- |
| Data Privacy & Student Protection | Data breaches, profiling, and consent issues | Data minimisation, clear consent (parents/children), secure storage |
| Algorithmic Bias & Fairness | Reinforcing inequalities, disadvantaging groups | Bias auditing, representative data sets, equity-based design |
| Autonomy & Cognitive Development | Over-prescription, reduced creativity, loss of exploration | Design open-ended tasks, support teacher-led explorations, and monitor autonomy |
| Transparency & Explainability | “Black-box” decisions, teacher mistrust | User-friendly explanations, teacher involvement, human-in-the-loop decisions |
| Equity & Digital Divide | Unequal access, tribalisation of resources | Ensure universal infrastructure, offline options, and inclusive planning |
| Emotional/Social/Developmental Growth | Reduced peer interaction, over-monitoring | Blend AI with peer/group work, monitor social outcomes, and teacher engagement |

Conclusion

AI in elementary education shouldn’t compete with childhood; it should protect and enhance it.

With ethical design and human-centered teaching, we can make technology serve learning, not replace it.

At Kogents.ai, we create AI solutions that nurture curiosity, creativity, and fairness in classrooms.

Our platforms empower teachers, safeguard children, and balance innovation with empathy.

Let’s build the future of ethical, child-centered AI together!

FAQs

What are the ethical issues of using AI in elementary schools?

Key issues include data privacy for minors, algorithmic bias and fairness, reduced autonomy and creativity in children, transparency and explainability of AI systems, equity of access and the digital divide, and ensuring that emotional, social, and developmental aspects of childhood are not compromised.

How does AI affect child learning and development in elementary education?

AI can personalise instruction, adapt learning tasks, provide immediate feedback, and help teachers manage differentiated learning. But it may also reduce opportunities for peer interaction, open-ended exploration, mistake-driven learning, and creative problem-solving—key components of child cognitive development and educational technology integration.

How can schools choose the best AI educational tools for elementary levels?

Schools should evaluate tools based on data privacy compliance, transparency of algorithms, teacher controllability, equity of access, ability to customise, and alignment with child developmental needs. Gather teacher input, run pilot programs, and include children’s and parents’ voices. Compare solutions not only on features and cost but also on ethical maturity and pedagogical fit.

What teacher training is required to use AI tools in elementary classrooms responsibly?

Training should cover: digital literacy in early education; understanding of how AI algorithms work; pedagogy-first design; recognising bias; interpreting AI-generated insights; blending AI with human teaching; understanding the ethical implications of AI in primary education; and being able to explain AI outputs to students and parents.

How do policies and regulations address AI in K–12 settings?

International bodies like UNESCO and the OECD, along with region-specific laws, are increasingly focusing on trustworthy AI, data protection, child-safe technology, inclusive design, and human-centred approaches. Schools should align with national laws regarding children’s data, procurement standards, and ethical frameworks.

How can equity and access be maintained when AI tools are introduced in elementary education?

Ensure all children have hardware, connectivity, and supportive home/school infrastructure; include offline/low-tech fallback options; monitor for disproportionate benefits or harms; conduct bias audits; design for inclusive use; involve under-resourced communities in planning. The goal is to avoid amplifying the digital divide.
