Enterprise AI Development that Reduces Integration Risk for Global Teams

Summary:

Did you know that the new era of enterprise AI development is defined not by who can build the smartest model, but by who can integrate AI securely, seamlessly, and globally?

For entrepreneurs, solopreneurs, and enterprise leaders managing distributed teams, the challenge is no longer whether AI works; it’s whether it works everywhere, across multiple systems, time zones, and compliance frameworks.

AI pilots often thrive in isolation but stumble when integrated across ERP systems, CRMs, or regional data infrastructures.

This is where the true craft of AI development for enterprises begins: reducing integration risk without slowing innovation.

In this comprehensive guide, we’ll explore how custom enterprise AI development empowers organizations to scale across borders safely, ethically, and efficiently.

Key Takeaways

- Understand the true nature of integration risk in enterprise AI projects.

- Learn the complete enterprise AI development lifecycle, from discovery to global deployment.

- Explore actionable methods to mitigate integration risk across regions and tech stacks.

- Compare in-house vs vendor-led approaches in one concise table.

- Study credible enterprise AI solutions development case studies with measurable ROI.

- End with strategic imperatives to future-proof your AI roadmap.

The Hidden Bottleneck: Integration Risk in Enterprise AI

Integration risk is the silent killer of enterprise AI success. While teams often celebrate model accuracy or training speed, the true battlefield lies in how well AI integrates with existing systems such as finance, HR, supply chain, customer service, and compliance databases.

For enterprise-level AI development, even a minor schema mismatch or version conflict between APIs can lead to cascading failures.

This is a challenge that leading agentic AI providers anticipate and mitigate through modular orchestration and adaptive governance.

Consider global rollouts where teams use different data standards, privacy laws, or latency thresholds; each of these variations compounds integration risk.

Large enterprise AI development projects often fail not because models are poor, but because integration pipelines break under real-world complexity.

As systems evolve, dependencies drift, and governance frameworks tighten, AI must adapt continuously.

This is why modern AI integration for legacy systems demands modular architecture, strict governance, and resilient orchestration layers, the backbone of reliable global AI deployment.

The Enterprise AI Development Lifecycle

A well-structured enterprise AI development lifecycle ensures AI systems progress from concept to global scalability with minimal friction.

Below is a narrative walkthrough of each stage and how integration risk can be mitigated throughout the process.

Stage 1: Discovery and Strategy

- This phase involves defining business objectives, identifying AI opportunities, and evaluating system readiness.

- Entrepreneurs should perform a data and systems audit to assess integration touchpoints.

- Understanding where APIs, databases, and workflows intersect reveals early risk zones.

Stage 2: Data Architecture and Preparation

- Once objectives are clear, teams build the data pipelines and architectures to fuel AI.

- Integration risk emerges when data originates from multiple global systems.

- To mitigate this, adopt standardized data schemas, establish ETL consistency (e.g., Apache Airflow, Kafka), and create metadata catalogs for traceability.
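As a minimal sketch of what a standardized data schema can look like in practice, the snippet below checks incoming records against one shared field definition before they enter a pipeline. The `ORDER_SCHEMA` fields and the `validate_record` helper are hypothetical names chosen for illustration, not part of any specific tool mentioned above.

```python
from typing import Any

# Hypothetical shared schema: field name -> expected Python type.
# In a real pipeline this would live in a central, versioned location.
ORDER_SCHEMA: dict[str, type] = {
    "order_id": str,
    "amount": float,
    "currency": str,
    "region": str,
}


def validate_record(record: dict[str, Any], schema: dict[str, type]) -> list[str]:
    """Return a list of schema violations; an empty list means the record is valid."""
    errors: list[str] = []
    # Check every field the schema requires, in schema order.
    for field, expected in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
        elif not isinstance(record[field], expected):
            actual = type(record[field]).__name__
            errors.append(f"{field}: expected {expected.__name__}, got {actual}")
    # Flag fields that the shared schema does not know about.
    for field in sorted(record.keys() - schema.keys()):
        errors.append(f"unexpected field: {field}")
    return errors


if __name__ == "__main__":
    # A record from a regional system that sends the amount as text.
    regional_record = {"order_id": "A1", "amount": "10", "currency": "EUR"}
    for problem in validate_record(regional_record, ORDER_SCHEMA):
        print(problem)
```

Rejecting or quarantining records at the pipeline boundary keeps a regional formatting difference from surfacing later as a model or reporting failure.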

Stage 3: Model Development and Experimentation

- Here, machine learning (ML) and deep learning models are built, tested, and refined.

- While technical accuracy is key, integration foresight matters equally.

- Define consistent model input-output schemas, set up feature stores, and ensure models remain modular, capable of integrating with multiple business functions.

Stage 4: Testing and Validation

- AI must be validated both technically and operationally.

- Conduct integration sandbox tests simulating real-world environments: regional data rules, latency, and system load.

- This stage also validates governance, security, and explainability (XAI) requirements to ensure compliance across jurisdictions.

Stage 5: Enterprise AI Deployment

- The deployment phase is where many teams encounter friction.

- Using a microservices architecture and containerization (Docker, Kubernetes) reduces dependency conflicts.

- Each AI service can be updated independently, supporting scalable enterprise AI development across global teams.

- Incorporate CI/CD pipelines and blue-green or canary deployments for safe rollouts.
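To make the canary idea concrete, here is a small sketch of how a fixed share of traffic might be routed to a new model version. It assumes each request carries a stable identifier; the `route_request` function and the `"canary"` / `"stable"` labels are hypothetical, and in practice this split is usually handled by a load balancer or service mesh rather than application code.

```python
import hashlib


def route_request(request_id: str, canary_percent: int) -> str:
    """Return 'canary' or 'stable' for a request, deterministically.

    Hashing the identifier means the same request ID always lands on the
    same version, which keeps behavior consistent during a rollout.
    """
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    # Map the first 4 bytes of the hash to a bucket in the range 0..99.
    bucket = int.from_bytes(digest[:4], "big") % 100
    return "canary" if bucket < canary_percent else "stable"


if __name__ == "__main__":
    # Send roughly 10% of requests to the new version.
    sample = [route_request(f"req-{i}", canary_percent=10) for i in range(1000)]
    print("canary share:", sample.count("canary") / len(sample))
```

Because the bucket is derived from the ID, raising `canary_percent` only adds requests to the canary group; nothing already routed to the new version is moved back.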

Stage 6: Integration and Scaling Across Regions

- After successful deployment, scaling AI globally introduces new integration risks: latency, localization, and cross-region compliance.

- Adopt federated learning for sensitive data, regional caching for latency reduction, and cloud-agnostic orchestration to ensure resilience in hybrid or multi-cloud setups.

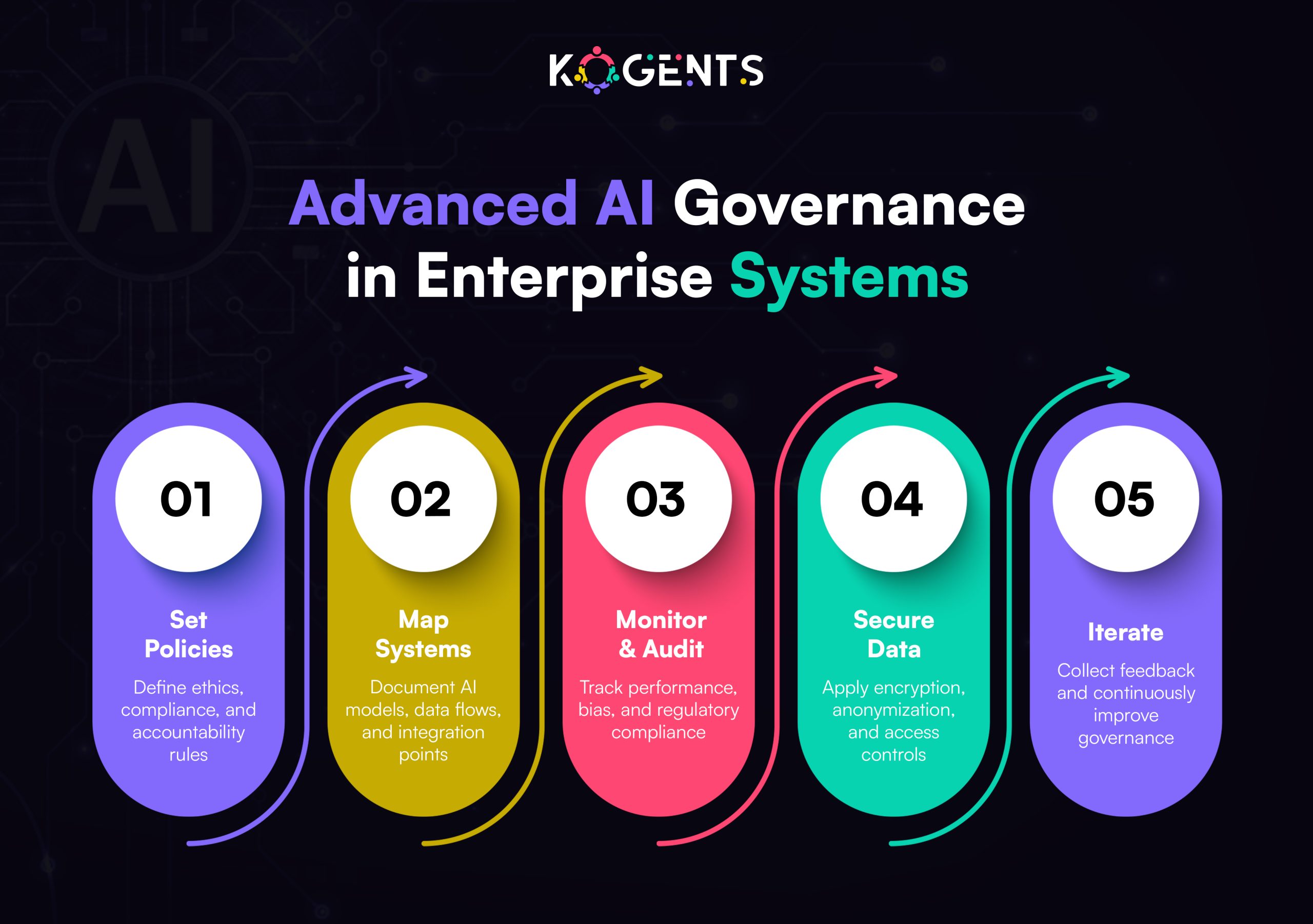

Stage 7: Monitoring, Governance, and Continuous Improvement

- AI never “ends.” Models drift, data evolves, and systems change.

- Set up monitoring pipelines, AIOps dashboards, and feedback loops.

- Monitor data drift, bias, and performance.

- Integration risk decreases when governance becomes continuous, not reactive.

Each stage feeds into the next, reinforcing an iterative ecosystem in which integration readiness is designed, tested, and matured before global scaling begins.

Integration Risk Mitigation Strategies Explained

Integration risk mitigation is not a checklist; it’s a mindset.

To design resilient enterprise AI, entrepreneurs and solopreneurs must embed mitigation practices across people, process, and technology layers.

1. Design for Modularity

- Adopt API-first design principles.

- Every AI module, whether NLP, computer vision, or predictive analytics, should communicate through well-documented APIs.

- This allows teams in different regions to build independently while maintaining interoperability.

2. Implement Version Control Across Systems

- Version drift can cripple integration.

- Use schema versioning, feature store registries, and backward-compatible APIs.

- This ensures older systems continue functioning even as new models roll out.
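One way to enforce backward compatibility is to compare schema versions automatically before release. The sketch below assumes a simple rule set (existing fields must not be removed or change type, and new fields must be optional); the `Field` class and `breaking_changes` function are hypothetical, and real schema registries apply their own, often richer, compatibility rules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Field:
    """A schema field: its type name and whether consumers must supply it."""
    type_name: str
    required: bool = True


def breaking_changes(old: dict[str, Field], new: dict[str, Field]) -> list[str]:
    """List the changes in `new` that would break clients built against `old`."""
    problems: list[str] = []
    for name, field in old.items():
        if name not in new:
            problems.append(f"removed field: {name}")
        elif new[name].type_name != field.type_name:
            problems.append(
                f"type changed: {name} ({field.type_name} -> {new[name].type_name})"
            )
    for name, field in new.items():
        # A new required field breaks older producers that do not send it.
        if name not in old and field.required:
            problems.append(f"new required field: {name}")
    return problems


if __name__ == "__main__":
    v1 = {"id": Field("string"), "score": Field("float")}
    v2 = {**v1, "region": Field("string", required=False)}
    print(breaking_changes(v1, v2))  # no breaking changes under these rules
```

A check like this can run in a CI pipeline so that an incompatible schema change is caught before a new model version reaches older systems.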

3. Build with Compliance in Mind

- Global teams face varying data privacy laws (GDPR, HIPAA, CCPA).

- Integrate data masking, encryption, and access control at the architecture level.

- Secure enterprise AI development is not optional; it’s a compliance mandate.
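As an illustration of masking at the architecture level, the sketch below shows two common patterns: keyed pseudonymization, so records can still be joined without exposing the raw identifier, and display masking. The function names and the sample key are hypothetical, and whether either technique satisfies a given regulation is a legal question this example does not answer.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256).

    The same value and key always yield the same token, so datasets can
    still be joined, but the raw value cannot be read from the token.
    """
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def mask_email(email: str) -> str:
    """Keep the first character and the domain; hide the rest of the local part."""
    local, sep, domain = email.partition("@")
    if not sep or not local:
        return "***"  # not a recognizable address, so hide it entirely
    return f"{local[0]}***@{domain}"


if __name__ == "__main__":
    key = b"example-key-load-from-a-secret-store"  # assumption: never hard-code real keys
    print(mask_email("alice@example.com"))
    print(pseudonymize("customer-123", key))
```

Applying these transformations where data enters the AI pipeline means downstream models and logs never see the raw identifiers.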

4. Use Federated or Hybrid Learning

For highly regulated industries, federated learning enables AI model training across distributed datasets without moving data across borders, a crucial practice for enterprise AI platform development in healthcare and finance.
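The core aggregation step can be sketched in a few lines. This example uses federated averaging, a widely used aggregation rule, and assumes each regional site trains locally and shares only its model weights and sample count; the `federated_average` function is illustrative and omits the secure transport and privacy protections a production system would need.

```python
def federated_average(updates: list[tuple[list[float], int]]) -> list[float]:
    """Combine per-site model weights into one global model.

    `updates` holds one (weights, n_samples) pair per site. Each site's
    weights count in proportion to how much data it trained on. Only
    weights and counts are exchanged; raw records stay at the site.
    """
    if not updates:
        raise ValueError("at least one site update is required")
    total = sum(n for _, n in updates)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(updates[0][0])
    if any(len(weights) != dim for weights, _ in updates):
        raise ValueError("all sites must send weight vectors of the same length")

    averaged = [0.0] * dim
    for weights, n in updates:
        for i, w in enumerate(weights):
            averaged[i] += w * n / total
    return averaged


if __name__ == "__main__":
    eu_site = ([1.0, 2.0], 100)   # weights trained on 100 local records
    us_site = ([3.0, 4.0], 300)   # weights trained on 300 local records
    print(federated_average([eu_site, us_site]))
```

In a full training loop, the averaged weights would be sent back to each site for another round of local training.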

5. Embrace Continuous Monitoring

- Integration success depends on ongoing observability.

- Tools like Prometheus, Grafana, and MLflow allow teams to detect anomalies, drift, or bottlenecks in real time.
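To show what a drift signal might look like before it reaches a dashboard, here is a sketch of the Population Stability Index (PSI), one common drift metric. The `psi` function, the bin count, and the 0.25 alert threshold are assumptions for illustration; the tools named above do not prescribe this calculation.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a live sample.

    Both samples are bucketed using the baseline's range; the index grows
    as the live distribution moves away from the baseline.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    if hi == lo:
        raise ValueError("baseline must contain more than one distinct value")
    width = (hi - lo) / bins

    def shares(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp out-of-range values into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]      # training-time feature values
    live = [0.5 + i / 200 for i in range(100)]    # live values shifted upward
    score = psi(baseline, live)
    # 0.25 is a commonly quoted rule-of-thumb threshold, used here as an assumption.
    print("drift alert" if score > 0.25 else "stable", round(score, 3))
```

A score like this can be exported as a metric and alerted on, so drift is noticed by monitoring rather than by a downstream failure.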

6. Design for Failure

- Global AI systems should fail gracefully.

- Use fallback mechanisms, circuit breakers, and redundancy protocols.

- When a regional system fails, others must continue operating.
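The circuit breaker pattern mentioned above can be sketched as follows. This is a simplified, single-threaded illustration: the `CircuitBreaker` class, its parameters, and the injectable `clock` are hypothetical names, and a production system would more likely rely on a tested library or on service-mesh features.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Stop calling a failing service for a while and use a fallback instead."""

    def __init__(
        self,
        max_failures: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._max_failures = max_failures
        self._reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def is_open(self) -> bool:
        return self._opened_at is not None

    def call(self, primary: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                return fallback()  # circuit open: skip the failing service
            # Cool-down elapsed: allow one trial call; one failure re-opens.
            self._opened_at = None
            self._failures = self._max_failures - 1
        try:
            result = primary()
        except Exception:
            self._failures += 1
            if self._failures >= self._max_failures:
                self._opened_at = self._clock()
            return fallback()
        self._failures = 0  # a success closes the circuit fully
        return result


if __name__ == "__main__":
    def regional_model() -> str:
        raise ConnectionError("regional endpoint unavailable")

    breaker = CircuitBreaker(max_failures=2, reset_after=5.0)
    for _ in range(3):
        print(breaker.call(regional_model, lambda: "served by fallback region"))
```

After repeated failures the breaker stops calling the regional endpoint altogether, which protects both the caller's latency and the struggling service while other regions keep operating.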

Key Note: By embedding these strategies throughout the lifecycle, teams reduce integration incidents, maintain consistent performance, and ensure system longevity, transforming AI from an experimental tool into a global operational advantage.

Vendor vs In-House: The Strategic Decision

Choosing between in-house custom enterprise AI development and enterprise AI consulting & development vendors determines not just cost, but control, scalability, and long-term risk.

| Decision Factor | In-House / Custom Enterprise AI Development | Vendor / Third-Party Enterprise AI Consulting & Development |
| --- | --- | --- |
| Control & Customization | Full control over architecture, data, and IP. Ideal for proprietary systems. | Prebuilt modules reduce setup time but limit deep customization. |
| Integration Risk | Higher initially; managed internally via CI/CD, testing, and documentation. | Lower short-term risk but potential long-term vendor dependency. |
| Cost Profile | High upfront investment (CapEx) but lower recurring cost. | Lower startup cost (OpEx) but possible recurring licensing fees. |
| Time to Market | Slower initially; faster for future iterations. | Rapid deployment with existing frameworks and tools. |
| Compliance & Security | Complete ownership of compliance implementation. | Vendor must align with your governance frameworks. |
| Scalability | Scales deeply if infrastructure is modular. | Scales faster but depends on the vendor’s tech stack. |
| Maintenance | Internal teams manage updates and bug fixes. | Vendor-driven; governed by SLAs and support terms. |

For entrepreneurs and solopreneurs, a hybrid approach often works best, using vendors for foundational infrastructure (like MLOps platforms) while building proprietary models in-house to retain control and innovation.

Real-World Case Studies

Case Study 1: Guardian Life Insurance — Reducing Integration Friction

Guardian Life modernized its analytics stack using enterprise AI software development practices.

By containerizing all models and enforcing strict API contracts, the company reduced integration failures by 35% across global branches and cut deployment time from weeks to days.

Case Study 2: Italgas — AI-Powered Predictive Maintenance

Italgas adopted a scalable enterprise AI development approach with edge inference for real-time monitoring of pipelines.

Using federated learning, the company minimized cross-border data transfer while complying with EU privacy mandates, saving €4.5M annually through predictive maintenance.

Strategic Imperatives for Global AI Success

As AI adoption matures, entrepreneurs must evolve from experimentation to strategic execution. Here are the strategic imperatives shaping the next wave of enterprise AI automation development:

- Adopt Compound AI Architectures: Blend LLMs, predictive analytics, and agentic AI frameworks like LangChain to create flexible, compound systems that integrate easily across functions.

- Prioritize Governance and Transparency: Build governance APIs that monitor bias, explainability, and compliance at runtime, not after deployment.

- Invest in Interoperability: Use open formats and open-source tooling (ONNX, MLflow, Apache Kafka) to ensure future compatibility.

- Foster AI Maturity Culture: Encourage teams to document lessons, share integration templates, and track adoption metrics.

- Think Globally, Act Modularly: Every new region should plug into a predefined architecture template, minimizing reinvention and ensuring uniform quality.

Wrapping Up!

In the interconnected global landscape, enterprise AI development is both a technological and organizational discipline.

Reducing integration risk isn’t just about protecting systems; it’s about empowering innovation, ensuring reliability, and uniting global teams under a shared digital framework.

Whether you’re a solopreneur exploring AI-driven automation or an enterprise leader scaling across continents, the path to sustainable AI success begins with intelligent integration.

Kogents.ai is here to make your enterprise-grade AI deployment risk-free. Give us a call at +1 (267) 248-9454 or drop an email at info@kogents.ai.

FAQs

What is enterprise AI development?

It’s the process of creating scalable, secure AI systems designed to integrate into complex enterprise ecosystems.

How does enterprise AI differ from consumer AI?

Enterprise AI development emphasizes governance, integration, and compliance across distributed systems — unlike consumer AI, which focuses on individual user experience.

What are the main phases of enterprise AI development?

Discovery, data architecture, model development, testing, deployment, scaling, and continuous governance.

Why is integration risk so critical?

Integration failures lead to downtime, compliance breaches, and lost trust — even if the AI model itself performs well.

What tools help manage enterprise AI integration?

Apache Airflow, MLflow, Kubernetes, TensorFlow Serving, and MLOps tools like Kubeflow.

How can solopreneurs apply enterprise AI strategies?

By adopting modular design, cloud-based AI services, and using prebuilt APIs for faster scaling.

What is federated learning, and why is it useful?

It’s a privacy-preserving method that trains models on distributed data sources without moving the data, ideal for regulated industries.

What role does AI governance play?

Governance ensures accountability, fairness, and compliance, critical for secure enterprise AI development.

What is the ROI of enterprise AI deployment?

Returns include reduced manual workload, lower integration costs, improved compliance, and faster innovation cycles.

What’s the future of enterprise AI?

The future lies in enterprise generative AI development, where intelligent agents autonomously coordinate workflows, guided by strong governance frameworks.

Kogents AI builds intelligent agents for healthcare, education, and enterprises, delivering secure, scalable solutions that streamline workflows and boost efficiency.