How Medical Diagnostics AI Improves Accuracy and Speeds Regulatory Approval

Summary:

Healthcare is at a tipping point where medical diagnostics AI is not just enhancing precision but fundamentally reshaping how new tools earn regulatory trust.

Hospitals, startups, and solopreneurs alike are discovering that AI systems capable of interpreting scans, lab data, and genomic signals can shorten diagnostic times, reduce human error, and even accelerate FDA or CE mark approvals.

For entrepreneurs, this convergence means something powerful: the same machine learning models that improve diagnostic accuracy can simultaneously generate the structured evidence regulators require.

Work that used to take years (clinical trials, validation cycles, and audit documentation) can now be expedited through built-in explainability, audit trails, and real-world performance monitoring.

This article explores how AI in medical diagnostics drives both precision and compliance, helping innovators transform algorithms into trusted, market-ready medical devices.

Decoding the Term: Medical Diagnostics AI

AI in medical diagnostics refers to the use of machine learning, deep learning, and AI agents for healthcare automation to detect, classify, or predict disease states from clinical data.

These AI diagnostic tools range from image-analysis systems in radiology to genomic predictors, lab test analyzers, and multimodal platforms that merge imaging, electronic health records (EHR), and biomarkers.

Unlike static rule engines, AI-based diagnostic systems learn patterns from large labeled datasets such as X-rays, CTs, MRIs, pathology slides, or molecular data.

The models then generate probabilities or alerts indicating potential abnormalities.

Common technologies include:

- Convolutional neural networks (CNNs) for medical imaging segmentation and classification

- Transformer and attention models for pathology or textual EHR interpretation

- Ensemble models for predictive diagnostics combining labs and imaging

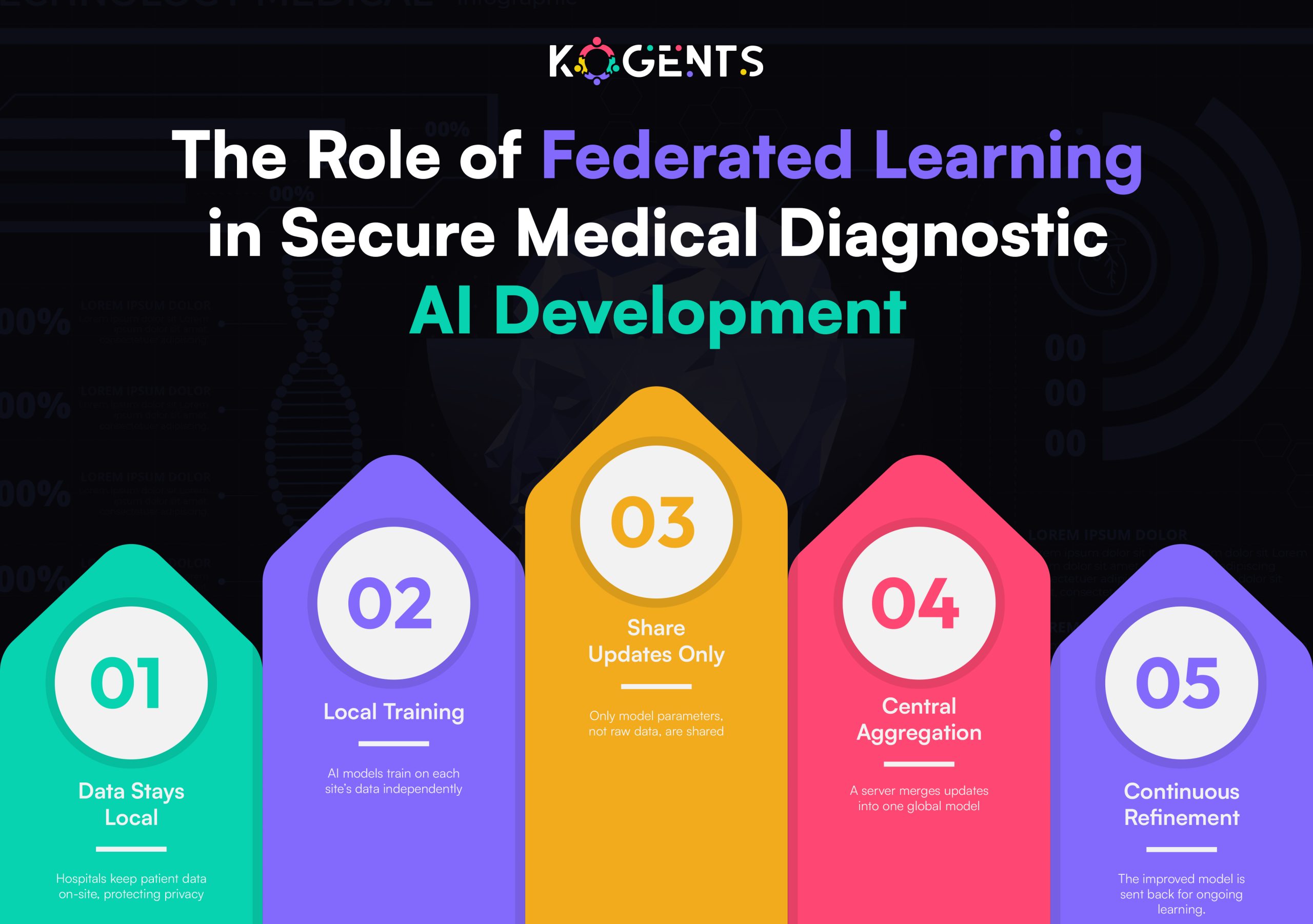

- Federated learning and privacy-preserving AI for cross-hospital data without violating HIPAA or GDPR

Today, such systems are increasingly regulated as Software as a Medical Device (SaMD). That shift means accuracy and validation are not academic exercises; they’re legal and commercial prerequisites.

How AI Improves Diagnostic Accuracy

Diagnostic precision is the cornerstone of clinical AI success.

Here’s how AI diagnostic systems deliver measurable accuracy gains compared to traditional workflows:

1. Pattern recognition beyond human perception

AI can detect faint patterns in imaging or molecular data, such as subtle radiomic features or genomic variants, that clinicians might miss.

Deep learning models trained on millions of examples can reach high sensitivity and specificity, and in some studies match or outperform radiologists on narrow tasks such as lung nodule or fracture detection.

2. Reduced inter-reader variability

Human diagnosticians vary in their interpretations. AI diagnostic systems bring consistency by applying the same learned criteria to every case.

In studies on chest X-rays and mammography, AI models cut variability by more than 50%, improving diagnostic reliability.

3. Robust validation and cross-site generalization

Modern AI agents employ external validation using datasets from multiple hospitals, scanner types, and demographics.

This tests whether the model generalizes and prepares evidence for regulatory review, since the FDA now expects performance to be reported across subgroups and devices.

4. Quantitative metrics: ROC, AUC, F1, confusion matrix

AI’s accuracy isn’t anecdotal; it’s quantifiable. Metrics like ROC/AUC, F1 score, and precision-recall curves demonstrate statistical performance.

When benchmarked on reference datasets or benchmarking platforms (e.g., MIMIC-CXR, MedPerf), these numbers become the evidence base for approval submissions.

5. Continuous learning and drift detection

Drift detection systems measure when input data shifts (e.g., new scanner type or demographic mix).

Automatic alerts and retraining pipelines keep performance stable, ensuring real-world accuracy long after release.
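One common way to quantify an input shift is the Population Stability Index (PSI), which compares how a feature is distributed in a reference sample versus current production data. The sketch below is illustrative only: the function name, the ten equal-width bins, and the 0.2 alert threshold (a widely quoted rule of thumb, not a regulatory requirement) are all assumptions, and a real pipeline would monitor many features and model outputs.

```python
import math

def population_stability_index(reference, current, n_bins=10, eps=1e-6):
    """PSI for one numeric feature; larger values mean a larger shift."""
    lo, hi = min(reference), max(reference)
    # Equal-width bins over the reference range; fall back to 1.0 if the range is zero.
    width = (hi - lo) / n_bins or 1.0

    def bin_fractions(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp so values outside the reference range land in the edge bins.
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # Floor at eps so empty bins do not cause log(0) or division by zero.
        return [max(c / len(values), eps) for c in counts]

    ref_frac = bin_fractions(reference)
    cur_frac = bin_fractions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_frac, cur_frac))

# Hypothetical alert threshold; choose and justify your own in validation.
PSI_ALERT_THRESHOLD = 0.2

def drift_alert(reference, current):
    return population_stability_index(reference, current) > PSI_ALERT_THRESHOLD
```

For example, `reference` could hold mean pixel intensities from the validation scans and `current` the same statistic from last week's scans; an alert would then trigger a human review rather than an automatic retrain.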

Together, these mechanisms produce diagnostic agents that don’t just detect disease; they generate traceable, reproducible proof of their accuracy, which is the fuel for regulatory success.

| Metric | What It Measures | Why It Matters for Regulatory Approval |
| --- | --- | --- |
| Sensitivity | True positive rate | Ensures diseases aren’t missed; high sensitivity supports safety claims. |
| Specificity | True negative rate | Prevents false alarms; important for clinical reliability. |
| ROC / AUC | Model discrimination power | Quantifies ability to distinguish between conditions; key for FDA submissions. |
| F1 Score | Balance of precision and recall | Useful for imbalanced medical datasets. |
| Confusion Matrix | Counts of true/false positives and negatives | Provides transparency and traceability in model evaluation. |
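To make the metrics in the table concrete, here is a minimal sketch that derives them from binary labels and model scores using only the Python standard library. The function names are illustrative, labels are assumed to be 0 (negative) or 1 (positive), and the sketch assumes each class appears at least once; production work would more likely use an established statistics library.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, fp, tn, fn) for binary labels encoded as 0/1."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return tp, fp, tn, fn

def summary_metrics(y_true, y_pred):
    tp, fp, tn, fn = confusion_counts(y_true, y_pred)
    return {
        "sensitivity": tp / (tp + fn),          # true positive rate
        "specificity": tn / (tn + fp),          # true negative rate
        "f1": 2 * tp / (2 * tp + fp + fn),      # harmonic mean of precision and recall
    }

def roc_auc(y_true, scores):
    """AUC as the chance a random positive outscores a random negative.

    Ties count as half a win (the Mann-Whitney formulation).
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

Note that sensitivity, specificity, and F1 depend on the decision threshold used to turn scores into predictions, while AUC summarizes performance across all thresholds.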

From Accuracy to Approval: The Regulatory Flywheel

Why does accuracy matter so much for regulatory approval? Because every metric (specificity, sensitivity, ROC/AUC) translates directly into the risk–benefit assessment performed by the U.S. Food and Drug Administration (FDA) or, in the EU, by notified bodies assessing conformity under the MDR and IVDR.

Here’s how the “accuracy → approval” flywheel works:

1. Explainability builds clinical trust

- Regulators demand interpretability.

- Explainable AI (XAI) techniques such as saliency maps, SHAP values, and attention overlays show why a model flagged an abnormality.

- These visual explanations improve clinician understanding and regulatory confidence.

2. Traceability satisfies SaMD requirements

- Every AI diagnostic agent must maintain audit trails: model version, dataset used, validation protocol, and performance metrics.

- Traceability allows reviewers to replicate results and ensures that updates remain compliant.

3. Bias and fairness documentation

- Accuracy across demographics is now mandatory.

- Regulators require reporting of subgroup performance (e.g., by sex, age, ethnicity).

- Demonstrating fairness and low bias speeds the approval review by preempting safety concerns.

4. Prospective and real-world validation

- FDA reviewers favor evidence beyond retrospective testing.

- AI agents that perform in prospective clinical trials or real-world deployments can submit stronger safety and efficacy data, shortening review cycles.

5. Post-market surveillance readiness

Under evolving frameworks, especially the FDA’s Predetermined Change Control Plan (PCCP), companies that design post-market monitoring and drift-control pipelines from day one gain approval faster because regulators can trust lifecycle safety.
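The audit-trail fields named under traceability above (model version, dataset used, validation protocol, and performance metrics) can be captured as a small immutable record with a content hash, so that any later change is detectable. This is a sketch under assumptions: the class name, field names, and the choice of SHA-256 over a canonical JSON dump are illustrative, not a format any regulator prescribes.

```python
import hashlib
import json
from dataclasses import asdict, dataclass

def sha256_of_bytes(data: bytes) -> str:
    """Hex digest used here to identify an exact dataset snapshot."""
    return hashlib.sha256(data).hexdigest()

@dataclass(frozen=True)
class AuditRecord:
    model_version: str
    dataset_sha256: str
    validation_protocol: str
    metrics: dict

    def fingerprint(self) -> str:
        # sort_keys gives a stable serialization, so equal records hash equally.
        payload = json.dumps(asdict(self), sort_keys=True).encode("utf-8")
        return hashlib.sha256(payload).hexdigest()

# Hypothetical example values for illustration only.
record = AuditRecord(
    model_version="1.4.0",
    dataset_sha256=sha256_of_bytes(b"example dataset bytes"),
    validation_protocol="VP-001 rev B",
    metrics={"sensitivity": 0.93, "specificity": 0.88},
)
print(record.fingerprint())
```

Storing the fingerprint alongside each release lets a reviewer confirm that the metrics they are reading belong to the exact model and dataset versions claimed.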

Operational Pipeline Built for Approval

Entrepreneurs who bake compliance into their AI pipelines from the start save months of regulatory rework.

A well-architected medical AI diagnosis platform follows this operational blueprint:

1. Data governance & privacy

- De-identification and encryption to meet HIPAA/GDPR

- Federated learning or on-premise training for privacy-preserving development

- Audit logs that record every data access

2. Standardization & interoperability

- Use of DICOM, HL7, and FHIR standards for imaging and EHR data

- Integration with PACS and clinical workflow tools

- Data normalization and version control for consistent model input

3. Model development & validation

- Balanced datasets and cross-validation to avoid overfitting

- External multi-site validation and hold-out cohorts

- Reporting of sensitivity, specificity, ROC/AUC with 95% confidence intervals

4. Explainability & uncertainty management

- Implement saliency maps, feature importance ranking, or attention visualization

- Provide confidence intervals or uncertainty scores in outputs

5. Documentation & submission readiness

- Design history files, validation protocols, and clinical performance summaries

- Clear alignment with IMDRF and SaMD documentation standards

6. Change control & monitoring

- Built-in version control for models and data

- Drift detection alerts

- Defined boundaries for retraining under the PCCP framework
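Step 3 above calls for reporting sensitivity and specificity with 95% confidence intervals. One standard option for a proportion is the Wilson score interval, sketched below. The function name is illustrative and z = 1.96 is the usual normal quantile for a two-sided 95% interval; whether Wilson, Clopper-Pearson, or a bootstrap interval is most appropriate for a given submission is a statistical-plan decision this sketch does not settle.

```python
import math

def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (default ~95%)."""
    if n <= 0:
        raise ValueError("n must be positive")
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return center - half_width, center + half_width

# Sensitivity example: 90 of 100 diseased cases correctly flagged.
low, high = wilson_interval(90, 100)
print(f"sensitivity 0.90, 95% CI ({low:.3f}, {high:.3f})")
```

With only 100 positive cases, the interval spans roughly 0.83 to 0.94, which shows why small validation cohorts make it hard to support a strong performance claim.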

Key Point: By aligning engineering with regulatory science, entrepreneurs can accelerate from prototype to market-cleared product without costly rewrites.

High-Impact Diagnostic Use Cases

Radiology and Imaging AI

AI-driven radiology solutions like Aidoc and Viz.ai detect critical conditions such as stroke, hemorrhage, and pulmonary embolism within minutes.

These AI diagnostic imaging systems reduce review times and have achieved multiple FDA 510(k) clearances.

Their success stems from rigorous multi-site validation, real-time alerting, and seamless PACS integration.

Digital Pathology and Histopathology

Deep learning diagnostics in pathology analyze gigapixel whole-slide images to identify tumors, grade cancer severity, or quantify biomarkers.

FDA-cleared systems such as Paige.AI demonstrate that automated histopathology can meet or exceed human accuracy with documented reproducibility, which is key for regulatory confidence.

Genomics and Precision Medicine

AI in genomic diagnostics interprets variant significance, predicts disease risk, and supports personalized treatment planning.

Companies like Tempus AI and Sophia Genetics use multimodal fusion (genomics + imaging + EHR) to reach higher predictive power and regulatory-grade evidence.

Cardiology and Digital Stethoscopes

Eko Health’s AI-enabled stethoscopes analyze heart sounds to detect murmurs or arrhythmias.

The combination of signal processing and deep learning achieved FDA clearance, showing that even portable diagnostic devices can meet regulatory standards if the evidence is rigorous.

Laboratory and Biomarker Analysis

AI in lab diagnostics automates the detection of abnormal blood cell morphology, predictive analytics for infection risk, and anomaly detection in chemistry panels.

These tools improve lab throughput and accuracy, forming an evidence base for CLIA-aligned validations.

Telemedicine and Point-of-Care Diagnostics

Portable devices using AI-enabled diagnostic tools, from smartphone skin lesion detectors to handheld ultrasound, bring accurate screening to remote and underserved areas.

As long as models are validated and explainable, regulators are increasingly open to decentralized AI diagnostics.

Challenges & Limitations

Even the most sophisticated AI diagnostic tools face technical and regulatory hurdles.

Data bias & generalization

- AI models can underperform on populations not represented in training data.

- Regulators scrutinize demographic subgroup results.

- Addressing bias through balanced sampling and fairness metrics is now a prerequisite for clearance.
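A simple starting point for the subgroup scrutiny described above is to compute sensitivity separately for each demographic group and look at the largest gap. The sketch below assumes records arrive as `(subgroup, y_true, y_pred)` tuples with 0/1 labels; the function names are illustrative, and a real bias analysis would also cover specificity, confidence intervals, and intersecting subgroups.

```python
from collections import defaultdict

def sensitivity_by_subgroup(records):
    """Map each subgroup to its sensitivity (true positive rate).

    Only subgroups with at least one positive case appear in the result.
    """
    tp = defaultdict(int)
    fn = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                tp[group] += 1
            else:
                fn[group] += 1
    groups = set(tp) | set(fn)
    return {g: tp[g] / (tp[g] + fn[g]) for g in groups}

def max_sensitivity_gap(per_group):
    """Difference between the best- and worst-served subgroup."""
    values = list(per_group.values())
    return max(values) - min(values)
```

A large gap does not by itself prove bias (small subgroups produce noisy estimates), but it flags where more data or closer review is needed before submission.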

Model drift & lifecycle management

- Post-deployment, real-world data often diverges from training distributions.

- Without drift detection and PCCP plans, accuracy degrades and compliance risks emerge.
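In engineering terms, a change-control plan implies a gate that a retrained model must pass before release. The sketch below shows one hypothetical shape for such a gate: the `UpdateBounds` fields, the metric names, and the numeric limits are invented for illustration and are not taken from FDA guidance. The actual acceptance criteria are whatever a company specifies in its own authorized PCCP.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class UpdateBounds:
    min_sensitivity: float
    min_specificity: float
    max_drop: float  # largest tolerated decrease versus the cleared model

def check_update(cleared, candidate, bounds):
    """Return (ok, reasons) for a candidate model's validation metrics.

    `cleared` and `candidate` are dicts with "sensitivity" and "specificity".
    """
    reasons = []
    floors = {
        "sensitivity": bounds.min_sensitivity,
        "specificity": bounds.min_specificity,
    }
    for name, floor in floors.items():
        if candidate[name] < floor:
            reasons.append(f"{name} {candidate[name]:.3f} is below floor {floor:.3f}")
        if cleared[name] - candidate[name] > bounds.max_drop:
            reasons.append(f"{name} dropped more than {bounds.max_drop:.3f}")
    return (not reasons, reasons)
```

Returning the list of reasons, rather than a bare boolean, gives the audit trail a human-readable explanation for every rejected update.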

Black-box opacity

- Complex deep neural networks can lack transparency.

- Without explainable AI, clinicians hesitate to trust predictions, and regulators may delay approval pending interpretability evidence.

Integration complexity

Hospitals rely on legacy EHRs and PACS systems; interoperability gaps can stall adoption. Entrepreneurs must invest early in standards compliance (FHIR, HL7, DICOM).

Regulatory uncertainty

- Frameworks evolve quickly.

- The FDA and the EU’s IVDR now require ongoing monitoring, not one-time approval.

- Startups must budget for lifecycle compliance, not static submissions.

Cybersecurity & data privacy

- AI diagnostic software is still subject to medical-device cybersecurity rules.

- Encryption, authentication, and privacy safeguards must be built into design documentation.

Case Study Spotlight: Aidoc & Eko Health

Aidoc — Accelerating AI Doctor Diagnosis and Approval

Aidoc’s imaging AI platform analyzes CT scans to flag critical findings like pulmonary embolism and hemorrhage.

By combining deep learning, multi-site validation, and workflow integration, Aidoc secured over a dozen FDA 510(k) clearances.

Their approach (continuous monitoring, audit logging, and transparent validation reports) became a template for how diagnostic AI can both improve accuracy and satisfy regulators quickly.

Eko Health — AI-Enabled Cardiac Diagnostics

Eko Health integrates AI algorithms with digital stethoscopes to detect cardiac abnormalities. Each version of its model underwent prospective trials and external validation.

FDA clearance was granted because Eko documented bias analysis, sensitivity/specificity, and a robust post-market update plan, demonstrating how explainability and lifecycle management accelerate approval.

Both cases underscore a truth: AI companies that treat accuracy, transparency, and compliance as coequal goals reach the market faster and with greater trust.

Future of AI Diagnostics That Speeds Approval

The next generation of AI diagnostic agents will make approval even faster and safer.

- Federated learning and privacy-preserving collaboration

Hospitals can jointly train models without exchanging raw data, creating larger, more diverse datasets for validation, ideal for the FDA’s real-world evidence (RWE) requirements.

- Standardized benchmarking frameworks

Initiatives like MedPerf and precisionFDA will provide reproducible performance benchmarks, reducing the need for redundant validation studies.

- Adaptive regulatory pathways

The FDA’s PCCP and EU adaptive frameworks allow controlled model updates without full re-submission, enabling continuous improvement.

- Multimodal and causal AI models

By integrating imaging, genomics, and clinical data, these models improve sensitivity and specificity, yielding stronger clinical evidence per study.

- Explainability-by-design architectures

Next-gen agents will embed interpretability natively, producing self-auditing outputs that regulators can review instantly.
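To illustrate how hospitals can train jointly without exchanging raw data, the sketch below shows the aggregation step of federated averaging (FedAvg), one widely used federated learning algorithm; the article does not say which algorithm any particular vendor uses. Each site trains locally and shares only its model weights, and a coordinator averages them in proportion to each site's sample count. Weights are represented as flat lists of floats for simplicity, and the function name is illustrative.

```python
def federated_average(site_weights, site_sizes):
    """Sample-size-weighted average of per-site model weights.

    site_weights: one flat list of floats per site, all the same length.
    site_sizes:   number of local training samples at each site.
    """
    if len(site_weights) != len(site_sizes):
        raise ValueError("need one sample count per site")
    total = sum(site_sizes)
    n_params = len(site_weights[0])
    return [
        sum(w[i] * n for w, n in zip(site_weights, site_sizes)) / total
        for i in range(n_params)
    ]

# Two hypothetical hospitals: the larger one pulls the average toward its weights.
print(federated_average([[1.0, 2.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.5]
```

Sharing weights instead of records reduces privacy exposure but does not eliminate it on its own, which is why federated setups are often paired with additional safeguards.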

Conclusion

AI in medical diagnostics is proving that automation can enhance, not replace, human judgment, turning radiology, pathology, and cardiology into data-driven disciplines rooted in measurable accuracy and transparent oversight.

If your next innovation aims to detect disease faster, secure approval sooner, and inspire clinician trust, start by embedding explainability, validation, and monitoring into your design.

Then partner up with Kogents AI by calling us at +1 (267) 248-9454 or dropping an email at info@kogents.ai.

FAQs

What makes AI in medical diagnostics different from standard analytics?

Diagnostic AI directly influences patient care and therefore qualifies as a medical device (SaMD), subject to regulatory oversight and clinical validation.

How does improved accuracy lead to faster regulatory approval?

Strong sensitivity/specificity and well-documented validation simplify the FDA’s risk–benefit assessment, shortening review cycles.

What are the key FDA pathways for diagnostic AI?

Most products follow 510(k), De Novo, or PMA pathways depending on risk. Clear validation data and explainability accelerate all three.

What is a Predetermined Change Control Plan (PCCP)?

It’s an FDA mechanism allowing defined post-market model updates without full re-submission—crucial for continuous-learning AI systems.

How can startups ensure their AI generalizes across hospitals?

Use multi-site data, external validation, and domain adaptation to prove consistent performance across devices and demographics.

What documentation speeds regulatory clearance?

Comprehensive validation reports, audit logs, bias analyses, and explainability evidence aligned with IMDRF SaMD guidelines.

How does explainable AI affect clinician adoption?

Saliency maps or feature-attribution visuals let physicians see why the model flagged a case, increasing trust and compliance.

Can diagnostic AI be updated after approval?

Yes—if you define update boundaries in your PCCP and maintain version control with real-world monitoring.

What are common pitfalls delaying approval?

Insufficient external validation, unreported bias, missing audit trails, or lack of post-market surveillance plans.

How can solopreneurs compete with large medtech firms?

By focusing on niche diagnostic problems, using open datasets for validation, integrating explainability from day one, and partnering early with regulatory consultants.

Kogents AI builds intelligent agents for healthcare, education, and enterprises, delivering secure, scalable solutions that streamline workflows and boost efficiency.